Sivan Doveh

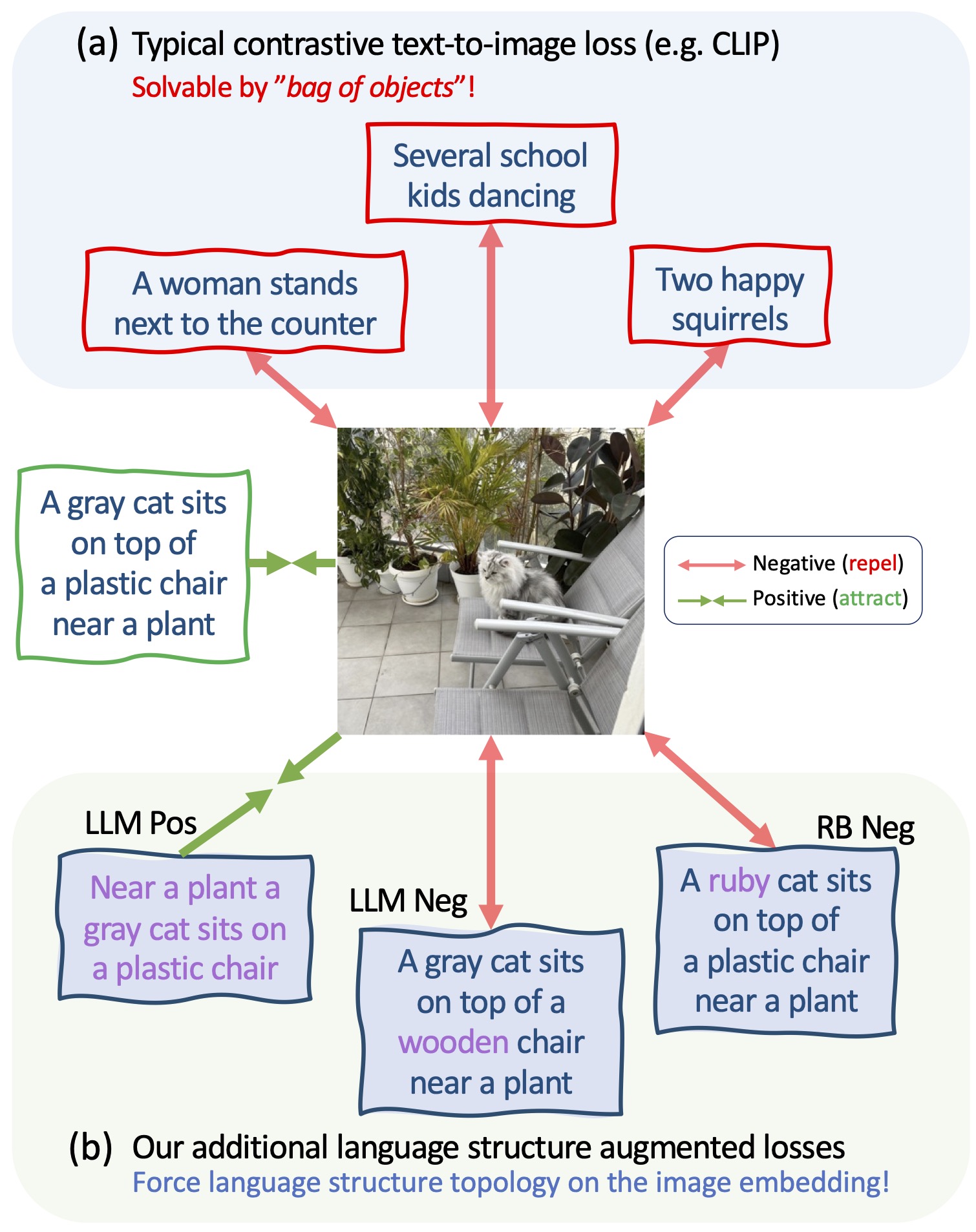

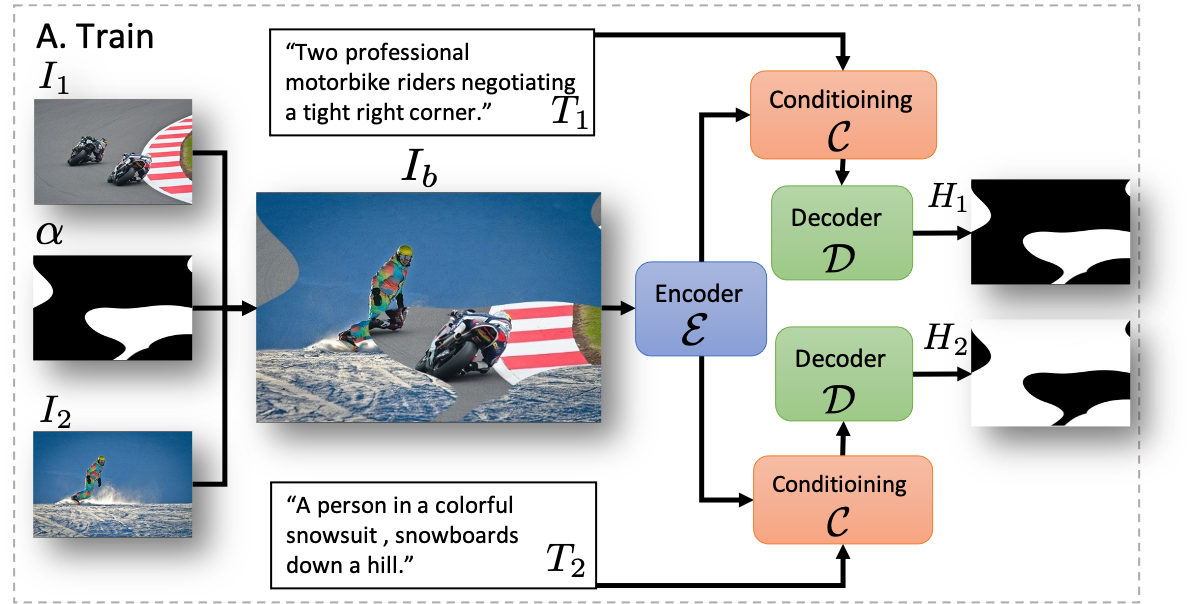

I am a student researcher at Google and a PhD student in Computer Science at the Weizmann Institute of Science, supervised by Prof. Shimon Ullman. I study how vision-language models function, exploring their core mechanisms, strengths, and limitations, mainly by developing new data and training approaches.

I earned my Master’s degree in Electrical Engineering from Tel Aviv University and my Bachelor’s degree in Electrical Engineering from Ben-Gurion University of the Negev (BGU). Alongside my studies, I have worked at Applied Materials and IBM Research.

I am actively looking for student collaborators in the area of multi-modal learning.

Contact: sivan.doveh [at] weizmann.ac.il